Azure Functions, also called Azure function apps, are a great way to build simple components (functions) and run them in the cloud, an approach also called “serverless computing” or FaaS (Functions as a Service). Those functions can be triggered by a timer, an HTTP request, a webhook and many other sources. The functions themselves can be implemented in one of the following languages: C#, F#, JavaScript/Node.js, PHP, PowerShell, Python, Batch, Bash.

It’s important to mention that functions running on the dynamic (consumption) plan have a timeout of 5 minutes, so if you have an endless running job, you should go for WebJobs instead. The idea behind Azure Functions is that you execute just a small piece of code; that’s why there is this timeout. Running those small pieces is very cheap: on the dynamic plan, the first 1,000,000 executions and the first 400,000 GB-s (gigabyte-seconds) of execution time per month are free. Let’s assume your function app has a memory size of 512 MB: 400,000 × 1024 / 512 = 800,000 seconds are free. So you can execute your function 1 million times with an average execution time of 0.8 seconds and it’s still free.
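The free-grant arithmetic, written out step by step. This assumes, as in the example, that every execution is billed at a constant 512 MB memory size and ignores any rounding the billing may apply:

$$
\frac{400\,000\ \text{GB}\cdot\text{s} \times 1024\ \tfrac{\text{MB}}{\text{GB}}}{512\ \text{MB}} = 800\,000\ \text{s},
\qquad
\frac{800\,000\ \text{s}}{1\,000\,000\ \text{executions}} = 0.8\ \tfrac{\text{s}}{\text{execution}}
$$

In other words, at 512 MB each of the 1,000,000 free executions may run for 0.8 seconds on average before the GB-s grant is used up.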

I will use Azure Functions to build two “applications” (functions). One of them will read data from my RSS feed and write it to my table storage. The second one will read all my upcoming events from https://www.meetup.com/ and create an iCal file out of them so that I can add them to my calendar.

In this blog post, I will create the PowerShell function that reads data from my RSS feed and writes it to the table storage. I already did the coding part in one of my previous blog posts, so this post will focus on the Azure function itself.

Create the Azure function app

If you just want to try out Azure Functions, the easiest way is to go to https://functions.azure.com/try. It lets you create an Azure function and try it for free, but only for 60 minutes. Unfortunately, it only supports C# and JavaScript functions, and its functionality is limited.

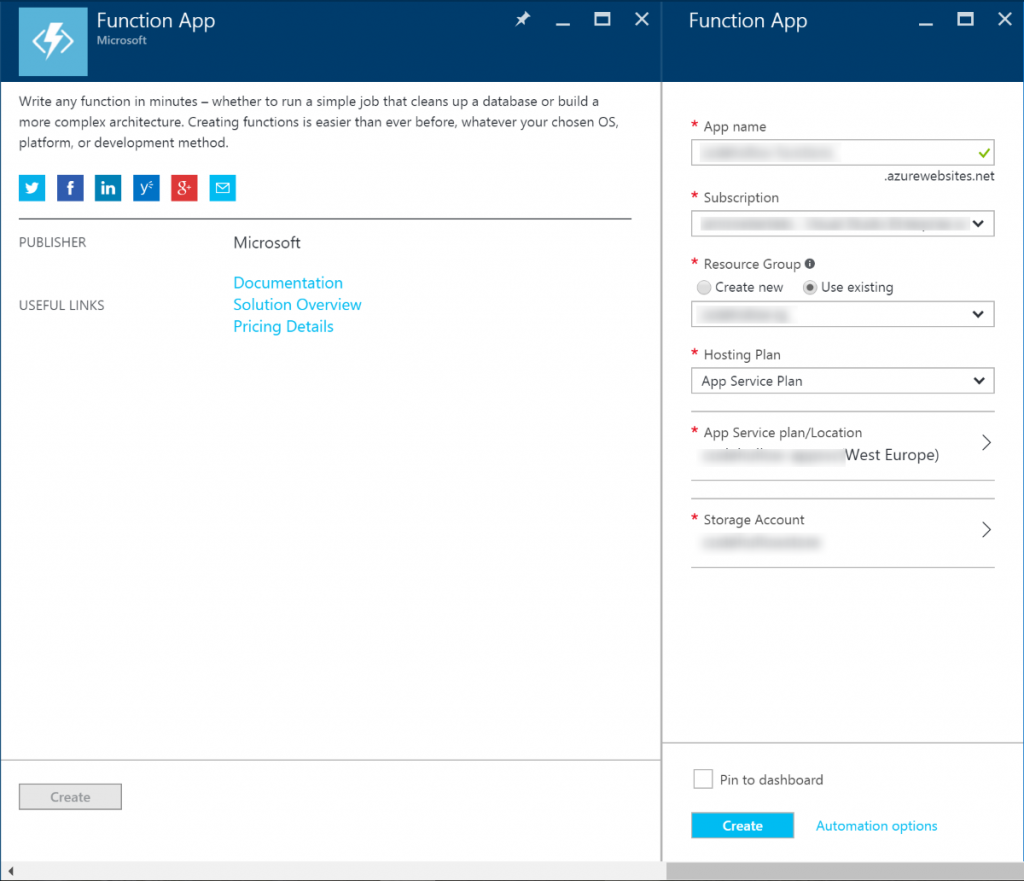

We want the full functionality, and the function should run regularly for the next few years, so we’ll create the function app in the Azure portal.

I’ll use the hosting plan “App Service Plan” because I already have a running App Service. You can also choose “Dynamic”. If you just want to try it out, it makes sense to use Dynamic and to create a new resource group and a new storage account, because you can then remove everything you created by simply deleting the resource group.

I want to use those functions for a longer period, so I’ll create it in one of my existing resource groups and I’ll also reuse one of my storage accounts.

After the creation, open the function app. If it shows that a new version of Azure Functions is available, upgrade it by clicking the link, scrolling to the top and pressing Update.

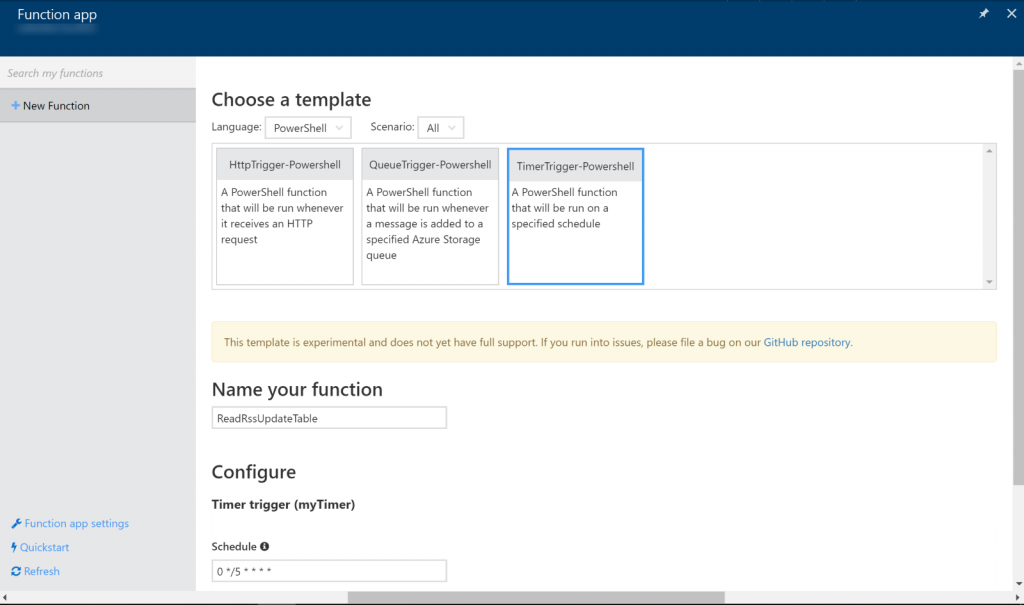

Create a new PowerShell function

Click the “New function” button on the left side and set the language filter to PowerShell. I’ll create a timer-triggered function and will therefore use the template “TimerTrigger-PowerShell”.

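The default schedule `0 */5 * * * *` means every 5 minutes. We can change that later on to `0 0 0 * * *`, which means daily at midnight (UTC by default). Azure Functions uses six fields here: second, minute, hour, day, month and day of week. For more information about CRON expressions, check “Azure Functions – Time Trigger (CRON) Cheat Sheet”.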
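Because the Azure Functions schedule has a leading seconds field, it is easy to misread it as a classic five-field cron expression. The following sketch only splits a schedule string into named fields so that each position is visible; `Split-CronSchedule` is a hypothetical helper for illustration, not part of Azure Functions, and it does not validate the values inside the fields.

```powershell
# Hypothetical helper, not part of Azure Functions: splits a six-field
# schedule (second minute hour day month day-of-week) into named fields.
function Split-CronSchedule([string] $schedule) {
    $parts = $schedule.Trim() -split '\s+'
    if ($parts.Count -ne 6) {
        throw "Expected 6 fields (second minute hour day month day-of-week), got $($parts.Count): '$schedule'"
    }
    # the [pscustomobject] cast on a literal keeps the property order
    return [pscustomobject]@{
        Second    = $parts[0]
        Minute    = $parts[1]
        Hour      = $parts[2]
        Day       = $parts[3]
        Month     = $parts[4]
        DayOfWeek = $parts[5]
    }
}

# default template schedule: second 0 of every 5th minute, i.e. every 5 minutes
Split-CronSchedule '0 */5 * * * *'
# second 0, minute 0, hour 0, i.e. once a day at midnight
Split-CronSchedule '0 0 0 * * *'
```

A five-field expression such as `*/5 * * * *` is rejected by the helper, which mirrors the most common mistake when copying schedules from other cron tools.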

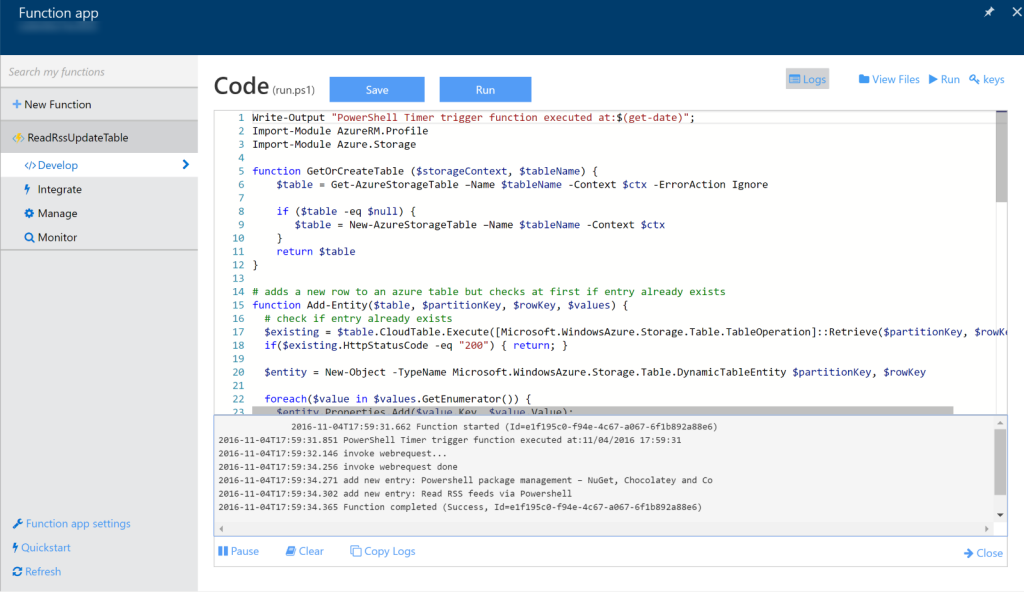

After that, I’ll take the code from the blog post “Working with Azure Table Storage and Excel” and add it to the PowerShell function:

```powershell
Write-Output "PowerShell Timer trigger function ReadRssUpdateTable executed at: $(Get-Date)"

Import-Module AzureRM.Profile
Import-Module Azure.Storage

# returns the table with the given name and creates it if it does not exist yet
function GetOrCreateTable ($storageContext, $tableName) {
    $table = Get-AzureStorageTable -Name $tableName -Context $storageContext -ErrorAction Ignore
    if ($null -eq $table) {
        $table = New-AzureStorageTable -Name $tableName -Context $storageContext
    }
    return $table
}

# adds a new row to an Azure table, but first checks if the entry already exists
function Add-Entity ($table, $partitionKey, $rowKey, $values) {
    # check if the entry already exists; if so, skip it
    $existing = $table.CloudTable.Execute([Microsoft.WindowsAzure.Storage.Table.TableOperation]::Retrieve($partitionKey, $rowKey))
    if ($existing.HttpStatusCode -eq 200) { return }

    # build a dynamic entity from the key/value pairs
    $entity = New-Object -TypeName Microsoft.WindowsAzure.Storage.Table.DynamicTableEntity $partitionKey, $rowKey
    foreach ($value in $values.GetEnumerator()) {
        $entity.Properties.Add($value.Key, $value.Value)
    }

    Write-Output ("Add new entry: " + $values["Title"])
    $result = $table.CloudTable.Execute([Microsoft.WindowsAzure.Storage.Table.TableOperation]::Insert($entity))
}

# define the storage account and context
$storageAccountName = "[STORAGE ACCOUNT NAME]"
$storageAccountKey = "[STORAGE ACCOUNT KEY]"
$tableName = "testdata"

$ctx = New-AzureStorageContext $storageAccountName -StorageAccountKey $storageAccountKey
$table = GetOrCreateTable $ctx $tableName

Write-Output "Read data from feed..."
# -UseBasicParsing avoids the Internet Explorer engine, which is not available here
$chfeed = [xml](Invoke-WebRequest "https://arminreiter.com/feed/" -UseBasicParsing)
Write-Output "Reading data done"

foreach ($entry in $chfeed.rss.channel.item) {
    $values = @{
        "URL"     = $entry.link
        "Title"   = $entry.title
        "PubDate" = [DateTime]::Parse($entry.pubDate)
    }
    $rowKey = $entry.'post-id'.InnerText.PadLeft(5, '0') # add 0 at beginning for sorting
    Add-Entity $table "CodeHollow" $rowKey $values
}
```

The code is nearly the same as in the mentioned blog post; I only changed the following things:

- I added some Write-Output statements so that I can see the progress in the log.

- I used the “short” version of the script, which reads only the last 10 blog posts (instead of all of them), because I don’t expect to ever publish more than 10 new blog posts within 24 hours.

- During my first run, I received the error “Invoke-WebRequest : The response content cannot be parsed because the Internet Explorer engine is not available, or Internet Explorer’s first-launch configuration is not complete. Specify the UseBasicParsing parameter and try again.” Therefore, I added the parameter -UseBasicParsing to the Invoke-WebRequest call.
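The feed parsing and the row-key padding can be checked locally, without calling the live feed or touching table storage. This is a sketch under assumptions: the inline RSS document below is made up and only mimics the structure the function relies on (a WordPress-style `post-id` element in the `com-wordpress:feed-additions:1` namespace), and `ConvertTo-RowData` is a hypothetical helper, not part of the original script.

```powershell
# Made-up RSS snippet that mimics the structure of the real feed
$sampleFeed = [xml]@'
<rss version="2.0">
  <channel>
    <item>
      <title>Second post</title>
      <link>https://example.com/second-post/</link>
      <post-id xmlns="com-wordpress:feed-additions:1">42</post-id>
    </item>
    <item>
      <title>First post</title>
      <link>https://example.com/first-post/</link>
      <post-id xmlns="com-wordpress:feed-additions:1">7</post-id>
    </item>
  </channel>
</rss>
'@

# Hypothetical helper: turns one RSS item into a row key plus property values
function ConvertTo-RowData($entry) {
    $postId = $entry.'post-id'
    # an element with attributes comes back as XmlElement, a plain one as a string
    if ($postId -is [System.Xml.XmlElement]) { $postId = $postId.InnerText }
    [pscustomobject]@{
        RowKey = $postId.PadLeft(5, '0')   # "7" becomes "00007"
        Title  = $entry.title
        URL    = $entry.link
    }
}

$rows = foreach ($entry in $sampleFeed.rss.channel.item) { ConvertTo-RowData $entry }
$rows | Sort-Object RowKey

# why the padding matters: row keys are compared as strings
'42', '7' | Sort-Object          # 42, 7
'00042', '00007' | Sort-Object   # 00007, 00042
```

Azure Table storage orders entities by PartitionKey and then RowKey as strings, so without the leading zeros post 42 would be listed before post 7.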

And that’s the result:

I now have an Azure function that runs daily and writes some basic data about my blog posts to Azure Table storage (which I access from Excel). That’s awesome.

My first function is running. My next blog post (Working with Azure functions (part 2 – C#)) is about a C# function that reads all my upcoming events from all my groups via the https://www.meetup.com API and creates an iCal file out of them. This .ics file can be added to your favorite calendar program.
